Finance Dataset: Companies all over the world use data to improve their offerings. Although many organizations rely on internal sources such as CRM software, ERP systems, marketing automation tools, databases and other repositories, many are also turning to open data.

Open data are basically large data sets available to everyone on the Internet. This type of data may be collected by government agencies or by private enterprises. Lately, businesses have come to understand its importance.

Finance Dataset

The insurance sector is one of the industries that makes the best use of open data. The data requirement in insurance is high, and open data meets it to a certain extent. Open data can be used to explore customer patterns across demographic groups, find new opportunities among people in different financial situations, and much more.

In this article we list the best open financial and economic data sources that anyone can use:

Data.gov is a US government website that provides access to high-value, machine-readable data sets across various domains, generated by the executive branch of the federal government. The website was launched at the end of May 2009 by the then federal CIO, Vivek Kundra. It is powered by two open source applications, CKAN and WordPress, and is developed publicly on GitHub.

The Open Government Data (OGD) Platform India, data.gov.in, is a platform that supports the open data initiative of the Indian government. It is a joint initiative of the Indian government and the US government to facilitate access to Indian government data in machine-readable form.

In addition, the entire project is packaged as a product and made available as open source for countries worldwide to implement. The full product can also be downloaded from GitHub.
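Because the site runs on CKAN, its catalog can in principle be searched programmatically. The following is a minimal sketch, not an official client: it assumes the catalog lives at `https://catalog.data.gov` and exposes CKAN's standard `package_search` action, and the helper names (`build_search_url`, `extract_titles`, `search_titles`) are hypothetical. The sample payload is hand-written to mimic the usual CKAN response shape.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the Data.gov catalog is a CKAN instance at this base URL.
CATALOG_URL = "https://catalog.data.gov"


def build_search_url(query: str, rows: int = 5) -> str:
    """Build a URL for CKAN's package_search action."""
    params = urlencode({"q": query, "rows": rows})
    return f"{CATALOG_URL}/api/3/action/package_search?{params}"


def extract_titles(payload: dict) -> list[str]:
    """Pull data set titles out of a CKAN search response."""
    if not payload.get("success"):
        return []
    return [pkg.get("title", "") for pkg in payload["result"]["results"]]


def search_titles(query: str, rows: int = 5) -> list[str]:
    """Run the search over the network (requires internet access)."""
    with urlopen(build_search_url(query, rows), timeout=30) as resp:
        return extract_titles(json.load(resp))


# Offline illustration: a hand-written payload in the CKAN response shape.
sample_payload = {
    "success": True,
    "result": {
        "count": 2,
        "results": [
            {"name": "example-a", "title": "Example Data Set A"},
            {"name": "example-b", "title": "Example Data Set B"},
        ],
    },
}
sample_titles = extract_titles(sample_payload)
```

Calling `search_titles("consumer finance")` would perform the live request; the offline payload is only there so the parsing logic can be checked without network access.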

The EU Open Data Portal, which gives access to open data published by the European Union (EU) institutions and other EU bodies, was set up in 2012 following European Commission Decision 2011/833/EU on the reuse of Commission documents. The main motive is to let people reuse this information for both commercial and non-commercial purposes.

The Financial Times is one of the world’s leading business news organizations. And while the Financial Times is primarily about providing a wide range of information, news and services for the global business community, its market data section is also one of the most suitable open data sources for world markets.

By combining daily market data feeds with historical values, Global Financial Data (GFD) generates complete data series, offering some of the most comprehensive historical economic and financial information available anywhere. With a free subscription, anyone has access to GFD’s full data set and research for analyzing major world markets and economies.

The IMF, established in 1945, is governed by and accountable to its 189 member countries, giving it near-global membership. Although its primary goal is to ensure the stability of the international monetary system, the IMF also publishes data on international finances, debt, foreign exchange reserves, commodities and investments. IMF data sets are one of the sources that provide a clear insight into the global economic outlook, financial stability, fiscal monitoring and more.

The RBI Data Warehouse, launched by the Reserve Bank of India, is a platform that publishes data on different aspects of the Indian economy. The data is presented primarily through formatted reports. Be it money and banking, financial markets, national income, savings and employment, or other areas, the RBI Data Warehouse has you covered.

The World Bank’s Open Data platform offers open data on demographics and many economic and development indicators from all over the world. The World Bank’s Development Data Group coordinates statistical and data work and maintains a number of macro, financial and sectoral databases. As for data collection, most of the data comes from the statistical systems of member countries, and the quality of the global data depends on how well these national systems perform.

Harshajit is a writer, blogger and vlogger. He is a passionate music lover whose talents range from dance to cooking, and soccer runs in his blood. He is also a technician who loves to repair and improve things. When he is not writing or making videos, he may be reading books and blogs or watching videos that motivate him or teach him new things.

This study also covers the methods designed to assess the accuracy and privacy of our models and data. The temporal, ordered nature of time series data can help detect and predict future trends, which is enormously valuable for business planning and investment. Because of the regulations covering individuals and organizations, and the security risks of sharing data, much of the value in such data remains out of reach. Here, the work shows that synthetic data can fill this gap while maintaining privacy. By generating synthetic time series data that can be generalized and shared between different teams, financial institutions can gain a competitive advantage and the power to explore a whole new world.

Developers can test our methods by opening the example Colab notebook, clicking “Run all” and entering an API key to run the complete experiment, or by following the three-step process below!

For this experiment, the bank’s data science team provided a time series data set containing customer account balance information over time, with 6 columns and about 5,500 rows. There is a time component in the “date” field, and account balance trends that must be preserved for each “district”.

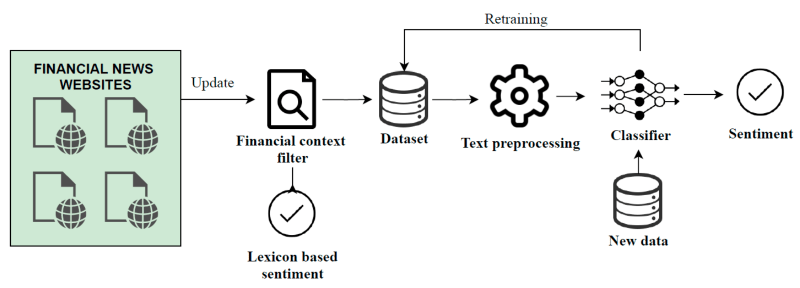

Let’s start by creating a simple pipeline that can be used to de-identify the time series data set and then create a synthetic model that generates an artificial data set of the same size and shape. The diagram shows the pipeline used to create and test the synthetic time series.

To de-identify the data, we use the Transform API with a configuration policy that randomly shifts the dates and the floating point values in the data. To ensure consistent shifts within each district, the “district_id” identifier is used as the seed value. This step offers a strong initial guarantee that the synthetic model does not memorize repeated data values.
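The seeded shifting idea can be illustrated with plain pandas. This is a minimal sketch under stated assumptions, not the transformation service used in the experiment: the function name `deidentify`, the column names (`date`, `district_id`, `balance`) and the shift ranges (`max_days`, `max_pct`) are all hypothetical choices for illustration.

```python
import numpy as np
import pandas as pd


def deidentify(df, key="district_id", date_col="date",
               float_cols=("balance",), max_days=30, max_pct=0.05):
    """Shift dates and scale float columns by amounts seeded per district."""
    out = df.copy()
    out[date_col] = pd.to_datetime(out[date_col])

    # One deterministic (day shift, scale factor) pair per district:
    # seeding the generator with the identifier makes the shift
    # consistent for every row of that district.
    shifts = {}
    for district in out[key].unique():
        rng = np.random.default_rng(int(district))
        days = int(rng.integers(-max_days, max_days + 1))
        factor = 1.0 + rng.uniform(-max_pct, max_pct)
        shifts[district] = (days, factor)

    day_shift = out[key].map(lambda d: shifts[d][0])
    out[date_col] = out[date_col] + pd.to_timedelta(day_shift, unit="D")
    for col in float_cols:
        out[col] = out[col] * out[key].map(lambda d: shifts[d][1])
    return out


# Toy data standing in for the bank's table (assumed column names).
toy = pd.DataFrame({
    "date": pd.to_datetime(["1998-01-31", "1998-02-28",
                            "1998-01-31", "1998-02-28"]),
    "district_id": [13, 13, 21, 21],
    "balance": [1000.0, 1100.0, 500.0, 450.0],
})
shifted = deidentify(toy)
```

Because the shift is derived from the identifier, rows from the same district move together, so the spacing between observations and the shape of each district's trend are preserved.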

We then train a synthetic model on the de-identified training data set, and use the model to create a synthetic data set that has the same size and shape as the original data. To do this, we use the “smart seeding” task, which prompts the model with the “date” and “district_id” attributes and effectively asks the model to synthesize the remaining data.
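To make the seeding idea concrete, here is a toy sketch. The class `ToySeededGenerator` is a hypothetical stand-in and is not the synthetic model used in the experiment: it simply samples each remaining column from a per-district normal distribution. What it does show is the interface, where the seed columns are supplied as a prompt and the generator fills in the rest, producing output with the same size and shape as the training data. Column names are assumptions.

```python
import numpy as np
import pandas as pd

SEED_COLS = ["date", "district_id"]  # assumed seed column names


class ToySeededGenerator:
    """Stand-in for a trained synthetic model (illustration only)."""

    def __init__(self, seed=0):
        self.rng = np.random.default_rng(seed)

    def fit(self, df):
        self.columns = list(df.columns)
        self.value_cols = [c for c in df.columns if c not in SEED_COLS]
        # Per-district mean and standard deviation of each non-seed column.
        self.stats = df.groupby("district_id")[self.value_cols].agg(
            ["mean", "std"])
        return self

    def generate(self, seeds):
        """Keep the seed columns as given and synthesize the others."""
        out = seeds.copy()
        for col in self.value_cols:
            mean = out["district_id"].map(self.stats[(col, "mean")])
            std = out["district_id"].map(self.stats[(col, "std")]).fillna(0.0)
            out[col] = self.rng.normal(mean.to_numpy(), std.to_numpy())
        return out[self.columns]


# Toy training data standing in for the de-identified table.
dates = pd.date_range("1998-01-31", periods=6, freq="D")
train = pd.DataFrame({
    "date": list(dates) * 2,
    "district_id": [13] * 6 + [21] * 6,
    "balance": np.linspace(900.0, 1200.0, 12),
})

# Prompt the generator with the seed columns of the training data.
seeds = train[SEED_COLS]
synthetic = ToySeededGenerator(seed=0).fit(train).generate(seeds)
```

A real sequence model would condition each generated value on the seed fields and on earlier values in the series; the per-district sampling here only mimics the input and output contract.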

Next we need to test the accuracy of our model, starting with a quick sanity check that compares the time series distributions for each district in the original and synthetic data sets. Here, the synthetic quality score (which can be seen in the notebook) is useful for assessing the model’s ability to learn correlations in the data. The results are in line with the original data set. Great!
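A quick check of this kind can be sketched with a pandas group-by. This is only an illustrative comparison of per-district summary statistics, not the quality score from the notebook; the function name `compare_by_district` and the column names are assumptions, and the toy "synthetic" table is just the original plus noise.

```python
import numpy as np
import pandas as pd


def compare_by_district(original, synthetic, value_col, key="district_id"):
    """Put per-district summary statistics of both data sets side by side."""
    stats = ["mean", "std", "min", "max"]
    orig = original.groupby(key)[value_col].agg(stats)
    synth = synthetic.groupby(key)[value_col].agg(stats)
    return orig.join(synth, lsuffix="_orig", rsuffix="_synth")


# Toy data: two districts with different balance levels.
rng = np.random.default_rng(1)
original = pd.DataFrame({
    "district_id": np.repeat([1, 2], 50),
    "balance": np.concatenate([rng.normal(100, 10, 50),
                               rng.normal(200, 20, 50)]),
})
# Stand-in for model output: the original values with small noise added.
synthetic = original.assign(
    balance=original["balance"] + rng.normal(0, 1, 100))

report = compare_by_district(original, synthetic, "balance")
report["mean_gap"] = (report["mean_synth"] - report["mean_orig"]).abs()
```

Large gaps in `report` for any district would be a signal to inspect that district's series before moving on to model-based evaluation.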

To tackle the difficult problem of evaluating the quality of synthetic time series, the bank’s team fitted an ARIMA model to both the synthetic and the original data sets. This made it possible to measure how well each data set captures the seasonality in the data. (Note: Our POC notebook uses the SARIMAX model in the statsmodels package, which includes a seasonal component, similar to the modified ARIMA model used by the bank’s team.)

We used district_id = 13 to train the SARIMAX models, and ran an experiment on each of the available target variables (four target variables were generated). To assess each model’s forecast accuracy, we used the root mean square error (RMSE) over the last year in the data set (1998). The available